clickhouse oom 问题描述

sql: Select domain As domain, ipVersion As ipVersion, ip As ip, serverRoomId As serverRoomId, Sum(visitCount) As visitCount From drms.domain_visit_count_list_dis Where day = '2019-12-08' group by domain, ipVersion, ip, serverRoomId order by visitCount desc limit 100 报错: Code: 241. DB::Exception: Received from dnsr02.dfs.lycc.qihoo.net:9000. DB::Exception: Memory limit (total) exceeded: would use 55.88 GiB (attempt to allocate chunk of 8388208 bytes), maximum: 55.88 GiB.

根据执行日志可以看到在当前执行的机器的内存可以看到使用的内存越来越多,当数据超过一定水平之后就会抛出改错。查询文档可以看到max_bytes_before_external_sort但是设置完成后发现不生效。深入https://clickhouse.yandex/docs/en/query_language/select/#select-order-by 发现内存溢出根本不是order by 的问题而是在group by 之前汇聚数据数据量超过内存只有采用堆外排序方能避免这个问题。

set max_bytes_before_external_group_by=30000000000; 堆外排序

set distributed_aggregation_memory_efficient=1; 减少distribute远程抓取数据group by 用的内存

可以看到明显差距不再报内存溢出的错误也能跑出结果,错误解决。

2 clickhouse zookeeper client session time out

zookeeper client session timout 的状况大部分是由于zookeeper的设置不当造成的,在jvm 长时间gc,zookeeper重新选举节点的时候也会出现类似的情况。处理的办法有两个:

1 zookeeper 需要单独安装在不同的机器上,且数据单独存储在一个盘上避免io打满的状况,同时zookeeper的启动参数设置要参考

https://clickhouse.yandex/docs/en/operations/tips/#zookeeper

2 建表的相关引擎上要尽量减小配置文件在zookeeper上的节点的文件,否则zookeeper上的节点过多会频繁引起jvm的波动在mergetree上设置https://clickhouse.yandex/docs/en/operations/server_settings/settings/#server-settings-use_minimalistic_part_header_in_zookeeper

3 在写入数据的时候进行间隔重试

3 clickhouse 宕机后重启 显示 no invalid user clickhouse

解决办法:

1 )查看原始数据目录显示 /data0/ch 数据目录user 994 group 992

2 )新增clickhouse用户和组

3 )检查配置文件日志文件 数据文件的目录是否恢复为clickhouse

具体执行的语句如下:

sudo groupadd -g 992 clickhouse

sudo useradd -u 994 clickhouse -M -g clickhouse

chown -R clickhouse:clickhouse /var/log/clickhouse-server

chown -R clickhouse:clickhouse /data0/ch-log

chown -R clickhouse:clickhouse /data*/ch

4 简述: 断网之后distribute 表失效,只能读取部分shard的数据,同时读取数据存在merge column 错误。

病症:

症状1:

DB::Exception: Block structure mismatch in MergingSorted stream: different names of columns:

qname String String(size = 65536), qtime_us UInt64 UInt64(size = 65536), client_ip IPv4 UInt32(size = 65536), client_port UInt16 UInt16(size = 65536), qtype UInt8 UInt8(size = 65536), responsor IPv4 UInt32(size = 65536), rname String String(size = 65536), rtype UInt16 UInt16(size = 65536), tid UInt32 UInt32(size = 65536), sid UInt8 UInt8(size = 65536), rdata String String(size = 65536), prevalence Float32 Float32(size = 65536), sld String String(size = 65536)

qtime_us UInt64 UInt64(size = 0), client_ip IPv4 UInt32(size = 0), client_port UInt16 UInt16(size = 0), qname String String(size = 0), qtype UInt8 UInt8(size = 0), responsor IPv4 UInt32(size = 0), rname String String(size = 0), rtype UInt16 UInt16(size = 0), tid UInt32 UInt32(size = 0), sid UInt8 UInt8(size = 0), rdata String String(size = 0), prevalence Float32 Float32(size = 0), sld String String(size = 0). Stack trace: 症状2:

诊断:

- 执行 select * from system.replication_queue 查看表中有多少 last_exception 不为空的表和报错异常 存在Found parts with the same 或No active replica has part 证明shard 内部replication 复制存在异常

治疗:

在所有报错的主机上执行:

- Found parts with the same min block and with the same max block as the missing part 1599618600_0_225_24. Hoping that it will eventually appear as a result of a merge:采取dettache partition 后重新attach partition paritition 的名字就是part中_的第一个字段

- No active replica has part 类似症状处理

- 执行 SELECT replica_path || '/queue/' || node_name FROM system.replication_queue JOIN system.replicas USING (database, table) WHERE create_time < now() - INTERVAL 3 DAY AND type = 'GET_PART' AND last_exception LIKE '%No active replica has part%' 查出所有无效的part queue在zookeeper上的路径

- 删除以上的路径

- 执行

SYSTEM RESTART REPLICAS - 参考https://github.com/ClickHouse/ClickHouse/issues/10368 和https://github.com/ClickHouse/ClickHouse/issues/2755

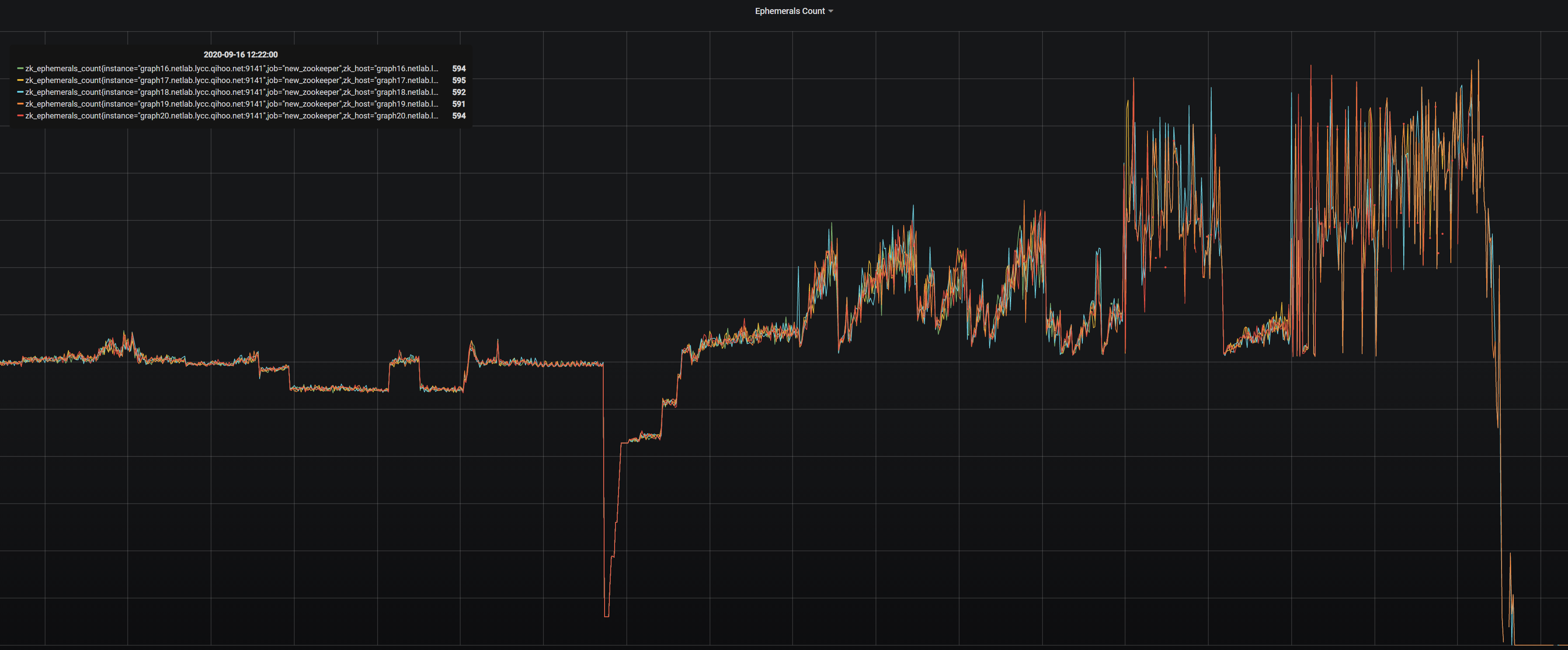

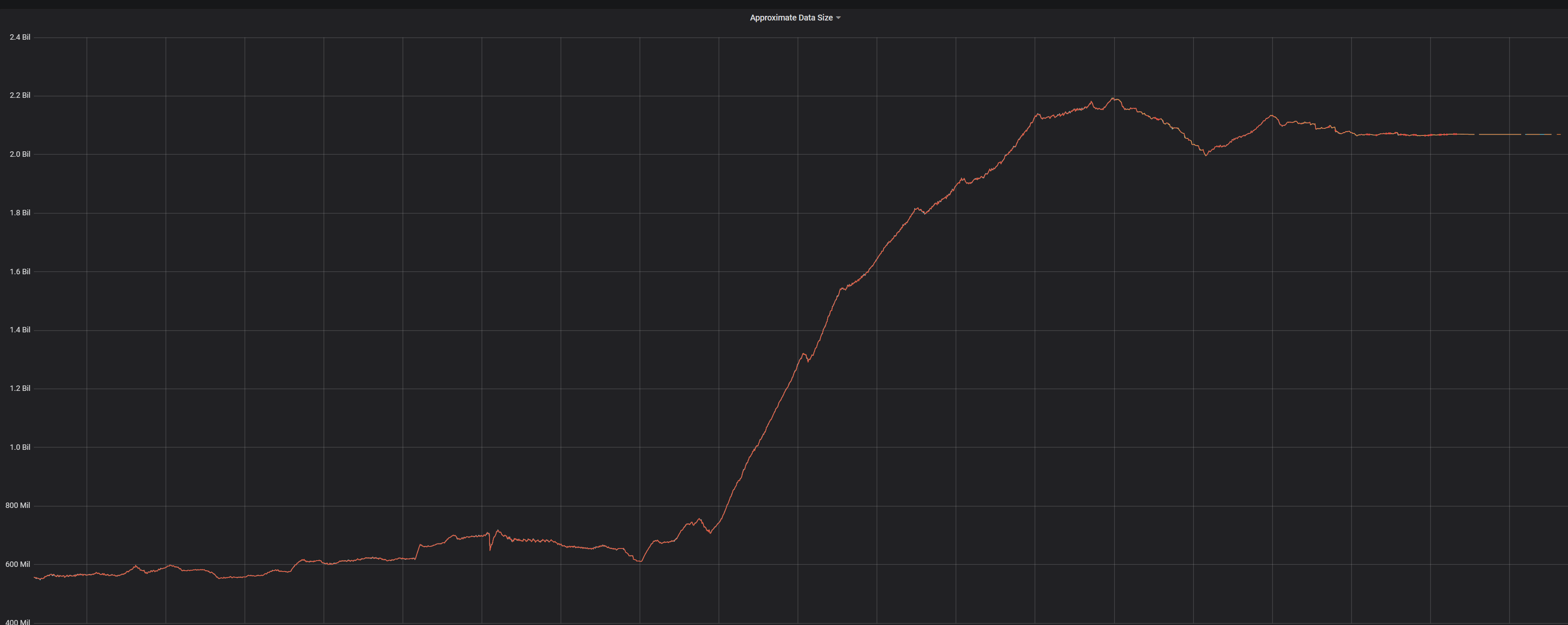

5 简述: zookeeper 早上6点突然挂了,导致整个clickhouse 集群无法正常服务

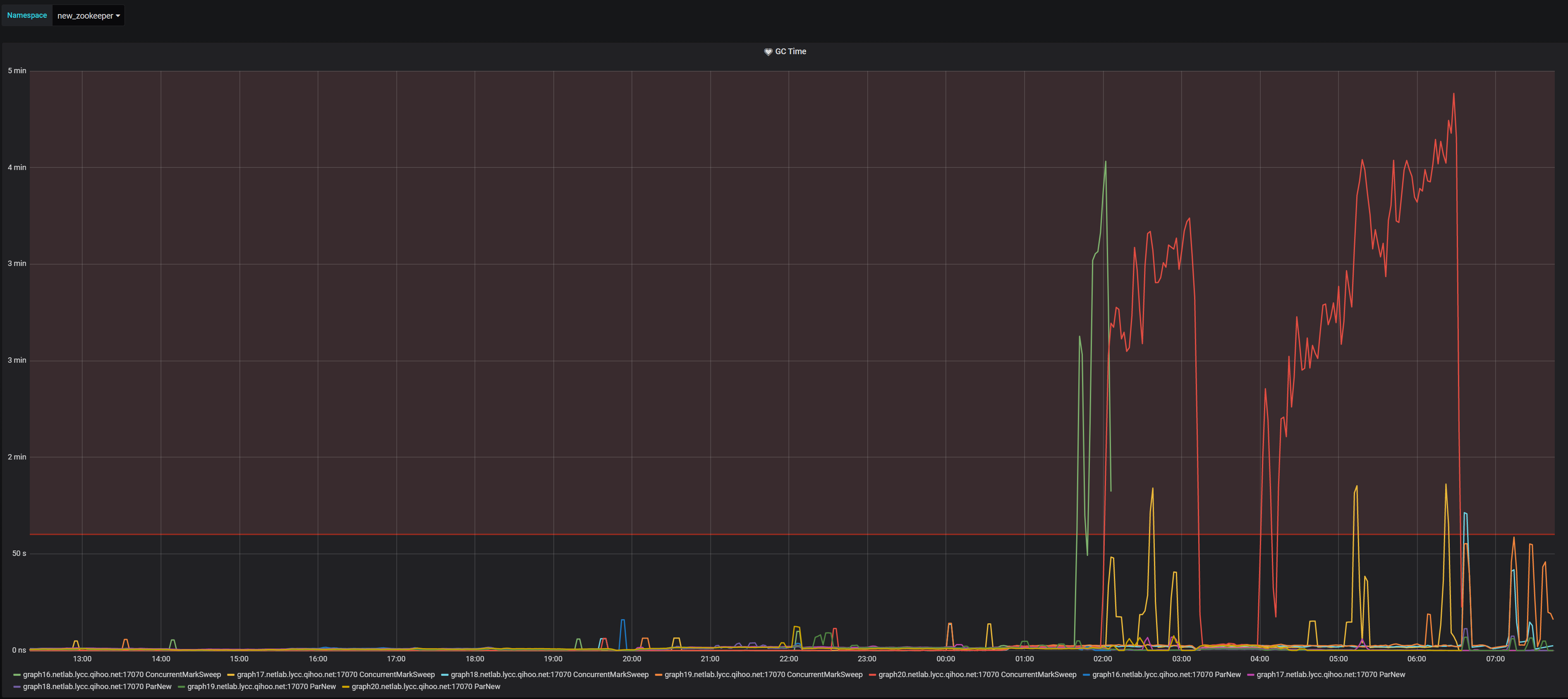

病症: 修改参数引发的血案,为了解决too many part 的问题我将parts_to_throw 由600 调整到2400 ,直接导致的后果是zookeeper 上数据量急剧上升 ,数据量上升4倍,临时节点数上升2倍

间接结果是导致zookeeper 在01:40 的时候引发zookeeper 的jvm 长时间gc很明显gc的时间是原来的几十倍,导致leader切换挨个挂掉后导致zookeeper 集群不可用

解决办法:

1 将parts_to_throw 改成1200

2 修改zookeeper的gc 策略将cms 改成G1 gc 后续考虑 ZGC

3 增加clickhouse 依赖的zookeeper集群

4 将这个引发too many part 的表重新设计

5 增加zookeeper gc的监控

6 简述: clickhouse 权限控制 readonly用户执行的过程中报错

症状:

Error running query: Code: 164, e.displayText() = DB::Exception: netlab-botnet-ch: Cannot execute query in readonly mode (version 20.8.4.11 (official build))诊断:

使用其它用户不会有相似问题,只有在readonly状况下才会有类似问题,这个用户配置了allow_database

新的版本里出现了_temporary_and_external_tables

解决:

在allow database 中添加

<allow_databases>

xxxx

<database>_temporary_and_external_tables</database>

</allow_databases>

本文由 妖言君 创作,采用 知识共享署名4.0 国际许可协议进行许可

本站文章除注明转载/出处外,均为本站原创或翻译,转载前请务必署名

最后编辑时间为: Mar 21, 2022 at 05:06 pm